WebSockets in Kubernetes

2024-05-24 9 min

Legacy code has a funny way of sneaking back into your life. A few weeks ago, I was working on a service that had been migrated from AWS Lambda to Kubernetes. At first glance, everything looked straightforward—until I met File Validator.

File Validator does one job: it checks the format of files uploaded through a web client. It also reads files from an FTP server—but that’s a story for another time. Sounds simple enough. But here’s the twist: the service uses a WebSocket to send validation results back to the client.

Now, why would anyone choose WebSockets for a use case like this?

I wasn’t there when that decision was made, but I can take a good guess:

- Asynchronous processing — Validation takes a few seconds, so it made sense to offload it and notify the client when done.

- No persistence needed — Errors didn’t need to be stored, so pushing them directly to the client kept things lightweight.

Honestly, it probably was a smart choice back then.

Fast forward to the present: the entire architecture has been migrated to Kubernetes. Services are containerized, running in pods, neatly managed, and—importantly—not a single one of them is using WebSockets. Except for File Validator.

So now we had a challenge: how do we keep this WebSocket-based communication working smoothly in an environment that wasn’t built with that in mind?

I won’t dive too deep into the technical weeds (at least not in this post), but let’s just say we managed to preserve the WebSocket approach.

The problem

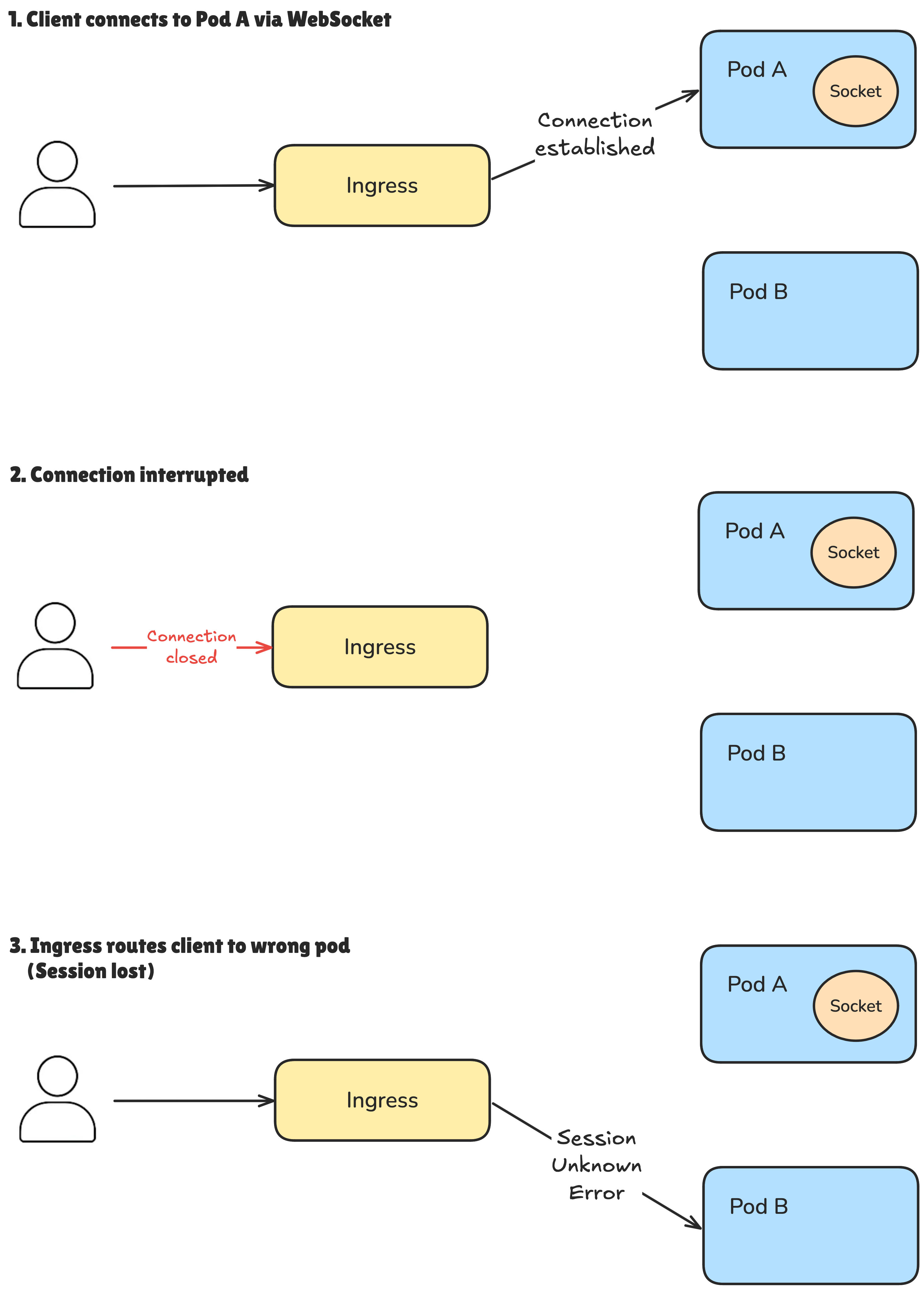

As part of our File Validator service, which handles file uploads from users, we ran into a significant scalability issue: the service couldn’t reliably run with more than one pod.

The root of the problem? WebSocket connections.

When multiple pods were deployed, WebSocket sessions couldn’t be preserved across reconnections. The Ingress controller had no way of consistently routing returning clients to the same pod that originally established the WebSocket connection. As a result, users experienced broken sessions and interrupted file validations.

Up to that point, this limitation hadn’t caused much trouble—File Validator’s workload was light enough to be handled by a single pod. But with projected company growth on the horizon, we knew this would soon become a serious bottleneck. The inability to scale horizontally meant that what was once “good enough” would quickly turn into a critical weakness.

Sticky sessions

During a WebSocket’s lifespan, it’s common for the connection to drop and reconnect several times: network hiccups, browser behavior, or mobile connections can all cause this. For the session to work correctly, the client needs to reconnect to the same pod that holds the original session, at least until that session is explicitly closed.

And here’s where the main challenge lies: how do you ensure a returning client always reaches the same pod in a distributed, multi-node environment like Kubernetes?

The answer is: sticky sessions.

In short, sticky sessions (also known as session affinity or session persistence) allow the Ingress controller to route a client consistently to the same pod that originally handled their request. This is crucial for maintaining active WebSocket connections without losing state.

That means the solution needs to be applied at the Ingress level—not in the application itself.

💡 Sticky sessions aren’t exclusive to Kubernetes. This pattern has been used for years in load-balanced environments to maintain client affinity across multi-node infrastructures.

There are typically two ways to implement sticky sessions:

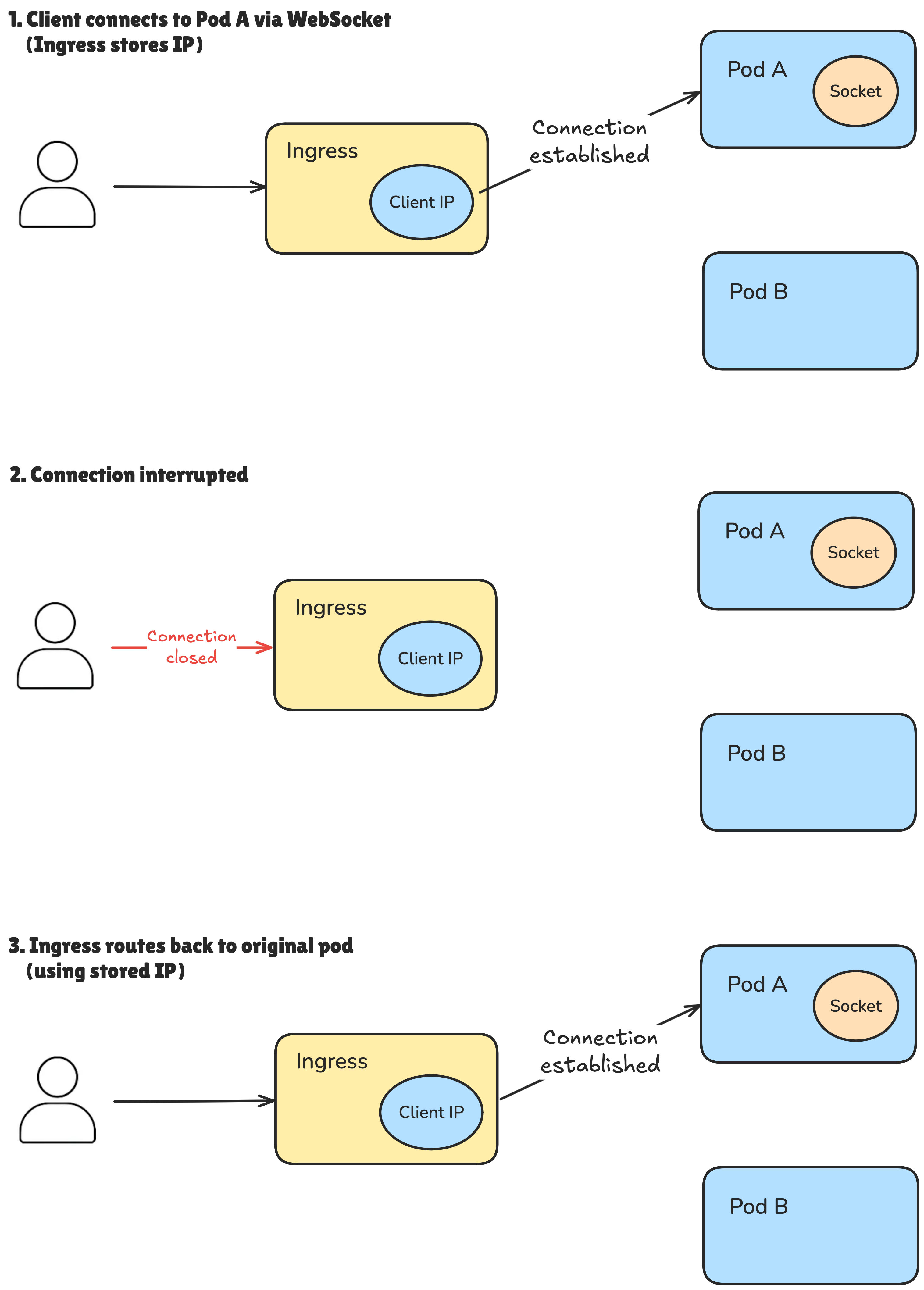

- By IP address – Clients are routed based on their source IP.

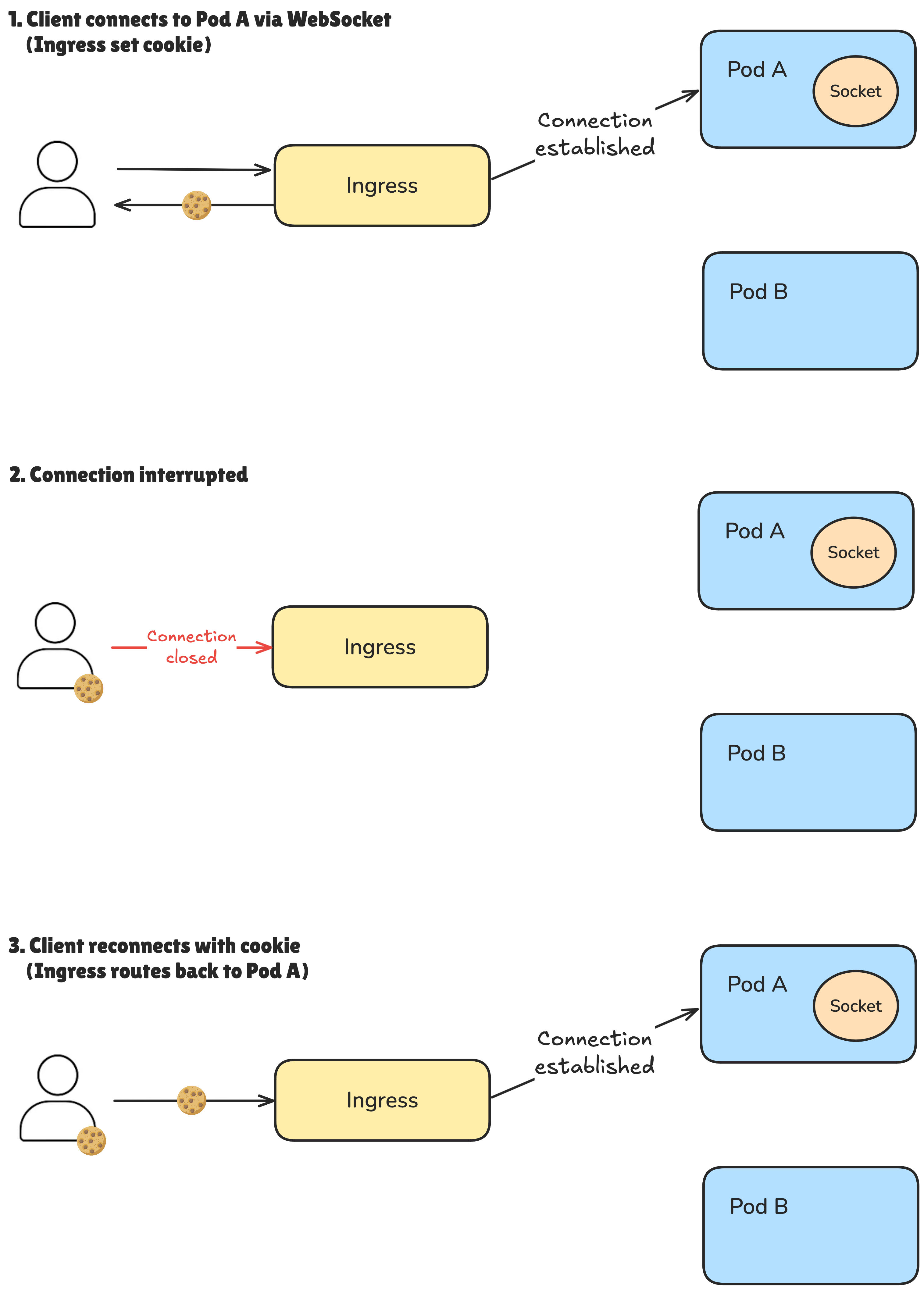

- By cookies – A cookie is issued to identify the session and ensure consistent routing.

IP based

The IP-based solution maintains session affinity using the client’s IP address. This means that all connections originating from the same IP will consistently be routed to the same pod.

To implement this, the following configuration changes are needed in your Ingress resource:

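```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: your-ingress
  namespace: your-namespace
  annotations:
    # Resolve the client IP: first X-Forwarded-For entry if present, else the peer address.
    # Recent ingress-nginx releases disable snippet annotations by default;
    # check the controller's allow-snippet-annotations setting.
    nginx.ingress.kubernetes.io/configuration-snippet: |
      set $forwarded_client_ip "";
      if ($http_x_forwarded_for ~ "^([^,]+)") {
        set $forwarded_client_ip $1;
      }
      set $client_ip $remote_addr;
      if ($forwarded_client_ip != "") {
        set $client_ip $forwarded_client_ip;
      }
    # Hash on the resolved client IP so the same client keeps landing on the same pod.
    nginx.ingress.kubernetes.io/upstream-hash-by: "$client_ip"
# spec (rules, backends) omitted for brevity
```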

The main advantage of this approach over cookie-based session persistence is that it doesn’t require any support or cooperation from the client. The Ingress controller handles all session routing by hashing the $client_ip variable, which the configuration snippet sets and the nginx.ingress.kubernetes.io/upstream-hash-by annotation uses as its hashing key.

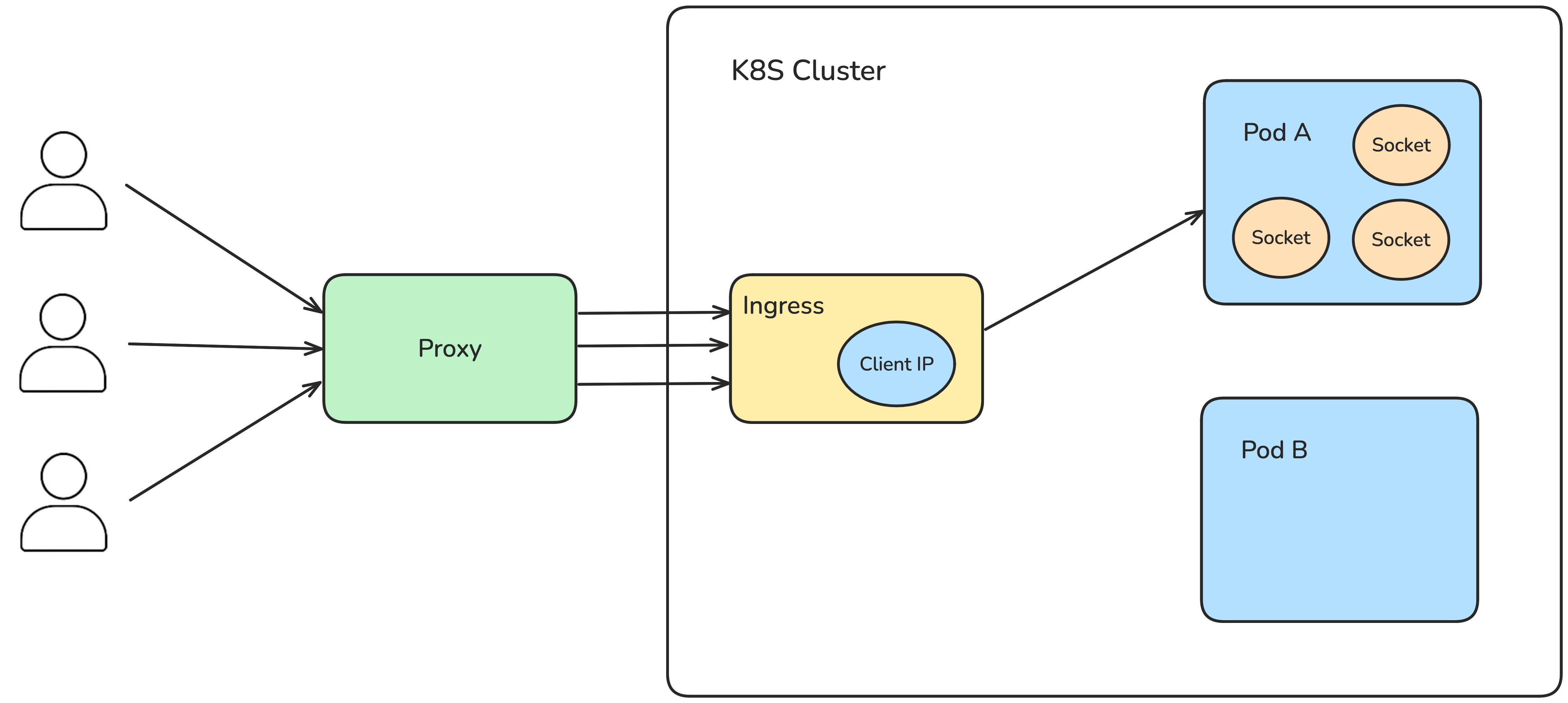

However, relying on IP addresses comes with a trade-off. The IP used may not be the actual client’s IP but rather that of a proxy or NAT gateway—especially in enterprise or mobile networks. In such cases, all users behind the same proxy may get routed to the same pod, which effectively disables load balancing for those users.

To reduce the impact of this limitation, the snippet in nginx.ingress.kubernetes.io/configuration-snippet attempts to extract the first IP from the X-Forwarded-For header. This helps ensure a more accurate client IP in environments where the request has passed through multiple reverse proxies or API gateways.
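To make the routing rule concrete, here is a rough JavaScript model of what the snippet and the upstream-hash-by annotation do together: resolve the client IP from the first X-Forwarded-For entry (falling back to the peer address), then hash it to pick a pod. It is a simplification under stated assumptions: the pod names are hypothetical, and ingress-nginx uses consistent hashing rather than the plain modulo shown here, so scaling the Deployment remaps fewer clients than this sketch would.

```javascript
const { createHash } = require("node:crypto");

// Mirrors the configuration snippet: take the first X-Forwarded-For entry,
// otherwise fall back to the address of the connecting peer ($remote_addr).
function resolveClientIp(xForwardedFor, remoteAddr) {
  const match = /^([^,]+)/.exec(xForwardedFor ?? "");
  return match ? match[1] : remoteAddr;
}

// Simplified stand-in for upstream-hash-by: hash the key and pick a pod by modulo.
// ingress-nginx uses consistent hashing instead; this only illustrates determinism.
function pickPodByIp(clientIp, pods) {
  const digest = createHash("sha256").update(clientIp).digest();
  return pods[digest.readUInt32BE(0) % pods.length];
}

// Hypothetical pod names for illustration.
const pods = ["file-validator-0", "file-validator-1", "file-validator-2"];
const ip = resolveClientIp("203.0.113.7, 10.0.0.2", "10.0.0.9");
console.log(ip, "->", pickPodByIp(ip, pods));
```

Every client behind the same NAT gateway resolves to the same IP, and therefore the same pod, which is exactly the imbalance described above.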

Cookie based

A more reliable method is to use cookies. The ingress controller issues a cookie that identifies the pod serving the client, and the browser sends it back with every subsequent request. Unlike the IP-based method, which depends solely on the client’s IP address, this creates a genuine one-to-one mapping between each client and a specific pod, even when many clients share the same IP.

To implement cookie-based sticky sessions, you can add these annotations to your ingress configuration:

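```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: your-ingress
  namespace: your-namespace
  annotations:
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "sticky-session"
    # Both values are in seconds: "60" keeps the cookie for one minute.
    nginx.ingress.kubernetes.io/session-cookie-expires: "60"
    nginx.ingress.kubernetes.io/session-cookie-max-age: "60"
# spec (rules, backends) omitted for brevity
```

These annotations instruct the ingress controller to issue a cookie named sticky-session that lives for one minute. Both values are expressed in seconds, not milliseconds: session-cookie-max-age sets the cookie’s Max-Age attribute, and session-cookie-expires sets the legacy Expires attribute for older browsers.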

A practical approach to setting this cookie’s TTL (Time To Live) is aligning it with your longest WebSocket session duration. This way, sessions remain intact throughout active connections, but the client can freely reconnect to a different pod once the WebSocket has completed its lifecycle.

In my scenario, one minute proved to be more than sufficient.
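For comparison with the IP-based model, here is a rough JavaScript sketch of cookie-based affinity: on the first request a pod is chosen and pinned in a cookie, and later requests carrying that cookie go back to the same pod as long as it still exists. It is illustrative only, not how ingress-nginx is implemented; the real controller does not put a readable pod name in the cookie, and the pod names and helpers here are hypothetical.

```javascript
const { randomInt } = require("node:crypto");

const COOKIE_NAME = "sticky-session"; // matches the session-cookie-name annotation

// Minimal Cookie header parser: "a=1; b=2" -> { a: "1", b: "2" }.
function parseCookies(header) {
  const cookies = {};
  for (const part of (header ?? "").split(";")) {
    const eq = part.indexOf("=");
    if (eq > 0) cookies[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return cookies;
}

// Route to the pinned pod if the cookie names a pod that still exists;
// otherwise pick one at random and return a Set-Cookie value that pins it.
function routeWithCookie(cookieHeader, pods, maxAgeSeconds) {
  const pinned = parseCookies(cookieHeader)[COOKIE_NAME];
  if (pinned && pods.includes(pinned)) {
    return { pod: pinned, setCookie: null };
  }
  const pod = pods[randomInt(pods.length)];
  // Max-Age is in seconds, so 60 keeps the client pinned for one minute.
  return { pod, setCookie: `${COOKIE_NAME}=${pod}; Max-Age=${maxAgeSeconds}; Path=/; HttpOnly` };
}

const pods = ["file-validator-0", "file-validator-1"]; // hypothetical pod names
const first = routeWithCookie(undefined, pods, 60);
const again = routeWithCookie(`${COOKIE_NAME}=${first.pod}`, pods, 60);
console.log(first.pod === again.pod); // true: the cookie pins the client
```

Falling back to a fresh pod when the pinned one is gone is what lets a client recover after its pod has been evicted.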

Handling different domains

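Another important consideration with cookie-based sessions is that browsers don’t attach cookies to cross-origin requests by default. The request has to opt in to sending credentials, and the server has to allow it through CORS (Cross-Origin Resource Sharing); this is a deliberate browser security constraint.

To send the sticky-session cookie across domains, you need to configure both your client and your server accordingly. If the client and server are on different sites rather than just different subdomains, the cookie also needs SameSite=None (which browsers only accept together with Secure); ingress-nginx exposes SameSite through the nginx.ingress.kubernetes.io/session-cookie-samesite annotation.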

On the client side, you must explicitly set the withCredentials option to true.

```javascript
import { io } from "socket.io-client";
const socket = io("https://server-domain.com", { withCredentials: true });
```

On the server side, ensure you explicitly list allowed client domains in your CORS configuration. Note that using a wildcard (*) won’t work when credentials are involved:

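```javascript
import { createServer } from "node:http";
import { Server } from "socket.io";

const httpServer = createServer();

const io = new Server(httpServer, {
  cors: {
    // Explicitly allowed client domain; browsers reject "*" when credentials are included.
    origin: ["https://client-domain.com"],
    credentials: true,
  },
});

httpServer.listen(3000); // illustrative port
```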

While this example specifically addresses a WebSocket setup, the same CORS considerations apply equally to traditional HTTP servers.
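At the HTTP level, an explicit origin list plus credentials: true comes down to a few response headers. The helper below is hypothetical, not Socket.IO’s actual code, and shows what a credentialed CORS response needs: the exact requesting origin echoed back (browsers reject a wildcard when credentials are included), Access-Control-Allow-Credentials set to true, and Vary: Origin so caches don’t serve one origin’s headers to another.

```javascript
// Hypothetical helper: which CORS headers a credentialed response should carry.
function corsHeadersFor(requestOrigin, allowedOrigins) {
  if (!requestOrigin || !allowedOrigins.includes(requestOrigin)) {
    // No CORS headers: the browser blocks the cross-origin response.
    return {};
  }
  return {
    "Access-Control-Allow-Origin": requestOrigin, // explicit origin; "*" is invalid with credentials
    "Access-Control-Allow-Credentials": "true",
    Vary: "Origin", // the response depends on the Origin request header
  };
}

const allowed = ["https://client-domain.com"];
console.log(corsHeadersFor("https://client-domain.com", allowed));
console.log(corsHeadersFor("https://unknown.example", allowed)); // {}
```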

Tradeoffs

Like any design decision, sticky sessions in Kubernetes come with tradeoffs.

Sticky sessions introduce a level of dependency between the client and the specific pod that handled the initial connection. This runs somewhat counter to the design philosophy of Kubernetes, where pods are ephemeral by nature—spun up or torn down dynamically based on demand or health.

In other words, stateful endpoints don’t play particularly well with Kubernetes’ architecture. And the core reason lies in the shutdown policy.

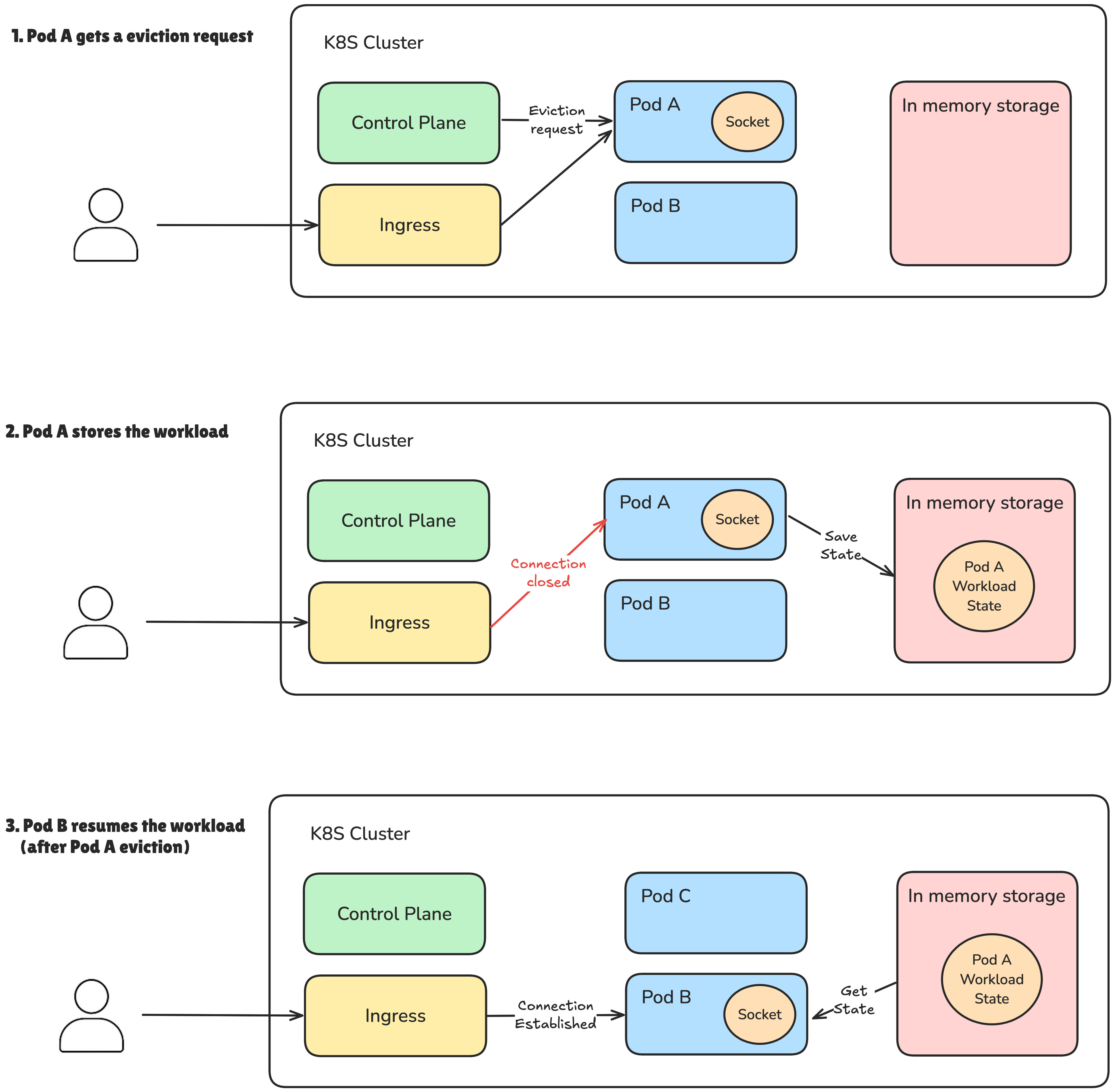

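The shutdown policy defines the grace period a pod has to finish its active work before it’s terminated. In Kubernetes this is the pod’s terminationGracePeriodSeconds (30 seconds by default): the container receives SIGTERM, the pod is removed from the Service’s endpoints so new requests stop being routed to it, and any process still running when the period ends is killed with SIGKILL. In between, the pod is still allowed to complete ongoing work.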

That’s why it’s critical to ensure that any WebSocket session (or long-running task) completes within this grace period. If the session outlives the pod’s termination window, you risk cutting it off mid-process.

In those cases, it’s a good idea to add temporary storage or a queue so that another pod can resume and complete the task after the eviction.
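The grace period only helps if the application actually uses it: by default, a Node.js process exits as soon as it receives SIGTERM. Below is a minimal sketch, assuming each active WebSocket session is tracked as a promise in a Map, of stopping new connections, waiting for in-flight sessions until shortly before the grace period ends, and handing anything unfinished to a queue. The session map, the safety margin, and the requeue callback are hypothetical.

```javascript
// Waits for tracked sessions until they all settle or the deadline passes.
// `sessions` is a Map of session id -> promise that settles when the session ends.
// Resolves with the ids of sessions that were still running at the deadline.
function drainSessions(sessions, deadlineMs) {
  const unfinished = new Set(sessions.keys());
  const allSettled = Promise.allSettled(
    [...sessions].map(([id, work]) =>
      Promise.resolve(work).finally(() => unfinished.delete(id))
    )
  );
  let timer;
  const deadline = new Promise((resolve) => {
    timer = setTimeout(resolve, deadlineMs);
  });
  return Promise.race([allSettled, deadline]).then(() => {
    clearTimeout(timer);
    return [...unfinished];
  });
}

// Hypothetical wiring: on SIGTERM, stop accepting connections, drain, then requeue leftovers.
function installShutdownHandler(server, sessions, { graceMs, marginMs, requeue }) {
  process.once("SIGTERM", async () => {
    server.close(); // no new connections; existing ones stay open
    const leftovers = await drainSessions(sessions, graceMs - marginMs);
    for (const id of leftovers) {
      await requeue(id); // e.g. push to a queue so another pod can finish the work
    }
    process.exit(0);
  });
}
```

With a one-minute grace period, graceMs would be 60000 and marginMs a few seconds, leaving room for the requeue step before SIGKILL arrives.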

Conclusion

Before jumping into a solution, it’s important to take a step back and evaluate a few key aspects:

- How long does a typical WebSocket session last?

- What’s the duration of the pod shutdown grace period?

- Does the WebSocket workload need to be stored or recoverable?

- Where should the sticky session state live—on the ingress controller or the client?

In my case, the WebSocket connections are short-lived—around 20 seconds on average. That means there’s no real need for persisting session state, even when a pod gets evicted. The shutdown grace period is set to one minute, which gives the pod more than enough time to wrap things up.

So, in this scenario, sticky sessions are a perfectly safe choice for WebSockets—whether as a short-, mid-, or even long-term solution.
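The checklist above can also be written down as a small sanity check to rerun whenever timeouts change. The function and its field names are hypothetical, and the example uses the figures from this scenario, treating the 20-second average as an estimate of the longest session; that is an assumption, since the maximum duration is what really matters.

```javascript
// Hypothetical helper encoding the checklist; all durations in seconds.
function assessStickySessionFit({ longestSessionSec, gracePeriodSec, cookieTtlSec, needsRecovery }) {
  const concerns = [];
  if (longestSessionSec > gracePeriodSec) {
    concerns.push("sessions can outlive the termination grace period");
  }
  if (cookieTtlSec < longestSessionSec) {
    concerns.push("the sticky cookie can expire before the session ends");
  }
  if (needsRecovery) {
    concerns.push("store or queue the workload so another pod can resume it");
  }
  return concerns;
}

// Figures from this scenario (20 s used as an estimate of the longest session).
console.log(assessStickySessionFit({
  longestSessionSec: 20,
  gracePeriodSec: 60,
  cookieTtlSec: 60,
  needsRecovery: false,
})); // []
```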

Future considerations

Sticky sessions are a valid way to keep WebSocket connections stable in Kubernetes, but they’re not perfectly aligned with the platform’s stateless design philosophy.

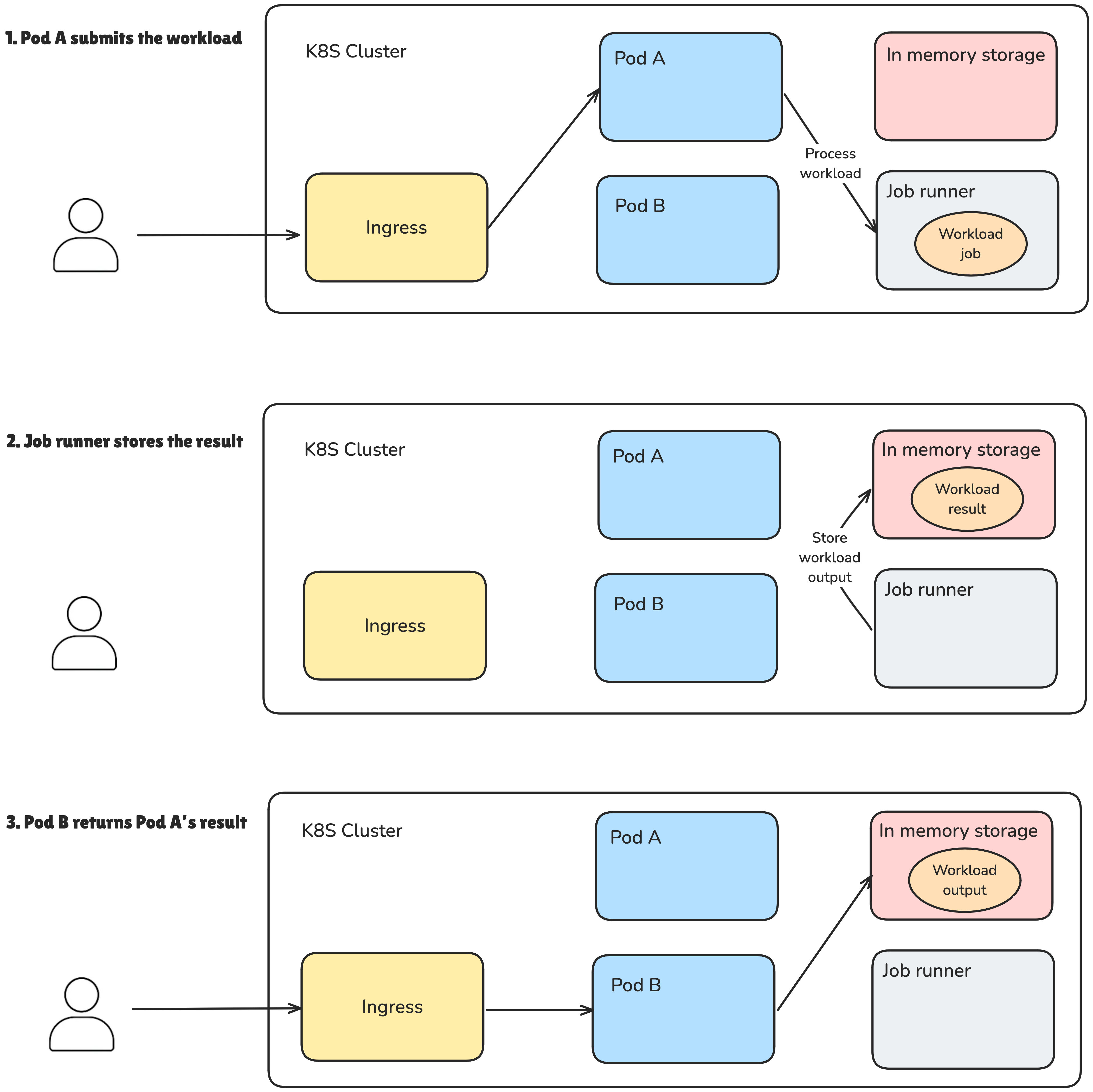

A more Kubernetes-friendly—and RESTful—approach would be to replace WebSockets with stateless HTTP requests.

In this pattern, the client triggers the workload via an HTTP request. The pod that receives it hands the work off to a background job and returns a job ID. The client then polls for the result, and any available pod can answer. Once the job completes, its result is temporarily stored somewhere every pod can read (a shared cache or database, for example), so whichever pod handles the final request can return it.

No session required. No affinity needed.
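A minimal sketch of that flow, assuming a result store shared by all pods (simulated here with an in-memory Map, which would not work across real pods) and a hypothetical validate function standing in for File Validator’s checks. submitJob and getResult play the roles of the two HTTP handlers; pollResult is the client loop.

```javascript
const { randomUUID } = require("node:crypto");

// Stand-in for a store shared by all pods (e.g. a cache or database).
// A per-process Map only works for this single-process demo.
const sharedStore = new Map();

// "Start" handler: record the job as pending and run validation in the background.
function submitJob(store, validate, file) {
  const jobId = randomUUID();
  store.set(jobId, { status: "pending" });
  Promise.resolve()
    .then(() => validate(file))
    .then(
      (errors) => store.set(jobId, { status: "done", errors }),
      (err) => store.set(jobId, { status: "failed", message: String(err) })
    );
  return jobId;
}

// "Poll" handler: any pod can answer, because the state lives in the store.
function getResult(store, jobId) {
  return store.get(jobId) ?? { status: "unknown" };
}

// Client loop: poll until the job is no longer pending or attempts run out.
async function pollResult(fetchResult, jobId, { intervalMs = 500, maxAttempts = 20 } = {}) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const result = await fetchResult(jobId);
    if (result.status !== "pending") return result;
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  return { status: "timeout" };
}

// Hypothetical validator: only .csv files are accepted.
const validate = async (file) => (file.name.endsWith(".csv") ? [] : ["unsupported format"]);

const jobId = submitJob(sharedStore, validate, { name: "report.txt" });
pollResult(async (id) => getResult(sharedStore, id), jobId, { intervalMs: 50 })
  .then((result) => console.log(result)); // { status: 'done', errors: [ 'unsupported format' ] }
```

In a real deployment the stored entry would also get an expiry, matching the "temporarily stored" part of the pattern.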

So, all things considered, sticky sessions gave a better ROI in my case. The implementation was straightforward, and it solved the problem without redesigning the whole system.

But one thing is clear: stateful requests should be avoided whenever possible, especially if you want to stay aligned with how Kubernetes is designed to operate—ephemeral, stateless, and flexible by default.